By: Weixiang Zhao, Jiahe Guo, Yang Deng, Xingyu Sui, Yulin Hu, Yanyan Zhao, Wanxiang Che, Bing Qin, Tat-Seng Chua, Ting Liu

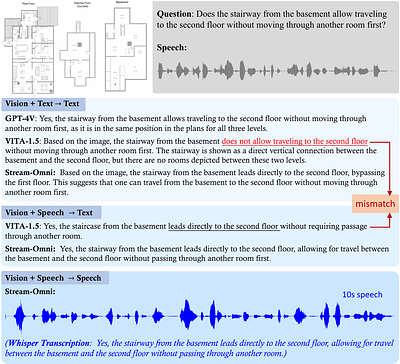

By: Yao Zhang, Chenyang Lin, Shijie Tang, Haokun Chen, Shijie Zhou, Yunpu Ma, Volker Tresp

By: Yining Hong, Rui Sun, Bingxuan Li, Xingcheng Yao, Maxine Wu, Alexander Chien, Da Yin, Ying Nian Wu, Zhecan James Wang, Kai-Wei Chang

By: Daewon Kang, YeongHwan Shin, Doyeon Kim, Kyu-Hwan Jung, Meong Hi Son

GUI-Robust: A Comprehensive Dataset for Testing GUI Agent Robustness in Real-World Anomalies

By: Jingqi Yang, Zhilong Song, Jiawei Chen, Mingli Song, Sheng Zhou, Linjun Sun, Xiaogang Ouyang, Chun Chen, Can Wang

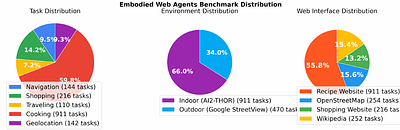

By: Mohammad Hashemi, Andreas Zufle

By: Jiahao Qiu, Xinzhe Juan, Yimin Wang, Ling Yang, Xuan Qi, Tongcheng Zhang, Jiacheng Guo, Yifu Lu, Zixin Yao, Hongru Wang, Shilong Liu, Xun Jiang, Liu Leqi, Mengdi Wang

By: Zhengxiang Cheng, Dongping Chen, Mingyang Fu, Tianyi Zhou

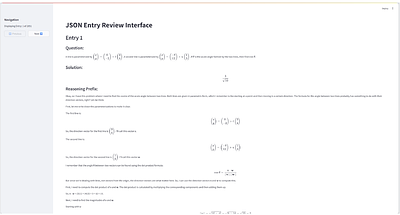

By: Shaolei Zhang, Shoutao Guo, Qingkai Fang, Yan Zhou, Yang Feng

By: Jonah Brown-Cohen, Geoffrey Irving, Georgios Piliouras