RWKV-IF: Efficient and Controllable RNA Inverse Folding via Attention-Free Language Modeling

Ji, G.; Xu, K.; Zheng, C.

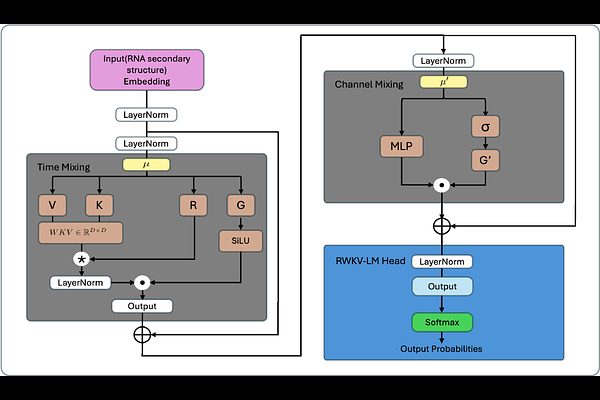

Abstract

We present RWKV-IF, an efficient and controllable framework for RNA inverse folding built on the attention-free RWKV language model. By treating structure-to-sequence generation as a conditional language modeling task, RWKV-IF captures long-range dependencies with complexity linear in sequence length. We introduce a decoding strategy that combines Top-k sampling, temperature control, and GC-content biasing to generate sequences that are both structurally accurate and biophysically meaningful. To overcome the limitations of existing datasets, we construct a large-scale synthetic training set from randomly generated sequences and demonstrate strong generalization to real-world RNA structures. Experimental results show that RWKV-IF significantly outperforms traditional search-based baselines, achieving higher accuracy and a higher full-match rate while substantially reducing edit distance. Our approach highlights the potential of lightweight generative models for RNA design under structural and biochemical constraints.
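To make the decoding strategy concrete, the sketch below shows one way a single decoding step could combine GC-content biasing, temperature control, and Top-k sampling over the four-nucleotide vocabulary. It is an illustration under stated assumptions, not the authors' implementation: the function names `top_k_distribution` and `sample_nucleotide` are hypothetical, GC biasing is assumed to be an additive logit bonus `gc_bias` on G and C, and the order of operations (bias, then temperature, then Top-k) is assumed because the abstract does not specify it.

```python
import math
import random

GC = ("G", "C")


def top_k_distribution(logits, k=2, temperature=1.0, gc_bias=0.0):
    """Return a renormalized distribution over the k best nucleotides.

    Hypothetical sketch: `logits` maps each nucleotide (A, C, G, U) to an
    unnormalized model score; `gc_bias` is an ASSUMED additive logit bonus
    for G and C (negative values discourage GC instead).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the vocabulary size")
    # 1. GC-content biasing: shift the G and C logits by gc_bias.
    biased = {nt: s + (gc_bias if nt in GC else 0.0) for nt, s in logits.items()}
    # 2. Temperature control: T < 1 sharpens, T > 1 flattens the distribution.
    scaled = {nt: s / temperature for nt, s in biased.items()}
    # 3. Top-k filtering: keep only the k highest-scoring nucleotides.
    top = sorted(scaled.items(), key=lambda kv: kv[1], reverse=True)[:k]
    # 4. Softmax over survivors (subtract the max for numerical stability).
    m = max(s for _, s in top)
    weights = {nt: math.exp(s - m) for nt, s in top}
    total = sum(weights.values())
    return {nt: w / total for nt, w in weights.items()}


def sample_nucleotide(logits, k=2, temperature=1.0, gc_bias=0.0, rng=None):
    """Draw one nucleotide from the filtered, renormalized distribution."""
    rng = rng or random.Random()
    dist = top_k_distribution(logits, k, temperature, gc_bias)
    nts = list(dist)
    return rng.choices(nts, weights=[dist[nt] for nt in nts], k=1)[0]


if __name__ == "__main__":
    step_logits = {"A": 1.0, "C": 0.5, "G": 0.4, "U": 0.9}  # made-up scores
    print(top_k_distribution(step_logits, k=2))                # keeps only A and U
    print(top_k_distribution(step_logits, k=2, gc_bias=1.0))   # keeps only C and G
    print(sample_nucleotide(step_logits, k=2, temperature=0.7, rng=random.Random(0)))
```

In this sketch, a positive `gc_bias` can change which nucleotides survive the Top-k cut, not just their relative probabilities: with the made-up scores above, a bonus of 1.0 replaces A and U with C and G as the two candidates. In a full decoder the bias would presumably be applied at every generated position, and could be tuned to steer the overall GC content of the designed sequence.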